Retrieval Augmented Generation: Enhancing Language Models with Contextual Knowledge

In natural language processing (NLP) and artificial intelligence, one concept has recently emerged as a promising approach to enhancing the capabilities of large language models (LLMs): Retrieval Augmented Generation (RAG). This technique combines the power of LLMs with knowledge retrieved from existing data sources at query time, enabling more informed and accurate responses to user queries.

In this blog post, we delve into the motivation behind RAG, its core principles, challenges, and advanced techniques to maximize its effectiveness.

Motivation behind Retrieval Augmented Generation

The motivation behind RAG stems from a limitation of LLMs such as OpenAI's GPT models: they excel at generating natural language responses, but they only know what was in their training data. That data has a cutoff date and does not include private or organization-specific content such as internal documentation. By retrieving relevant information from external data sources, such as documentation, articles, or knowledge bases, and supplying it to the model together with the question, RAG aims to improve the relevance and accuracy of generated responses. This expands the scope of information available to the LLM without retraining it and enables more nuanced, contextually relevant answers.

Main Idea: How Retrieval Augmented Generation Works

Ingest Data

The first step in implementing RAG is to ingest and preprocess the external data sources. This typically involves loading the documentation or relevant content and transforming it into a format that is compatible with the retrieval process. One crucial concept in this step is the notion of embeddings.

Embeddings

An embedding is a numerical representation of text data that captures its semantic meaning. In the context of RAG, each document or piece of content is transformed into an embedding vector in a high-dimensional space, where texts with similar meaning end up close to each other. This makes it possible to compare pieces of content by meaning, typically using a measure such as cosine similarity, and to retrieve the ones most relevant to a query.

Once the data has been transformed into embeddings, it is indexed to facilitate fast and effective retrieval during query processing.
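To make the ingestion step concrete, here is a minimal sketch in Python. It assumes a hypothetical `toy_embed` function that stands in for a real embedding model: it hashes words into a small fixed-size vector, so it captures word overlap rather than meaning. The "index" is just an in-memory list; a real system would call an embedding model and store the vectors in a vector database.

```python
import math
import re
import zlib

DIM = 256  # toy vector size; real embedding models typically use hundreds to thousands of dimensions

def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model (word hashing, not semantics)."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 gives each word a stable bucket across runs
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # L2-normalise so a dot product between two vectors equals their cosine similarity
    return [v / norm for v in vec] if norm else vec

def build_index(docs: dict[str, str]) -> list[tuple[str, list[float]]]:
    """Embed every document once at ingestion time and keep (doc_id, vector) pairs."""
    return [(doc_id, toy_embed(text)) for doc_id, text in docs.items()]

docs = {
    "install": "Install the CLI on Linux with the package manager.",
    "billing": "Update your billing address and download invoices.",
}
index = build_index(docs)
```

The expensive embedding work happens once per document here, which is why it belongs to ingestion rather than to query time.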

Query Your Data

When a user submits a query, the system transforms the natural language query into its corresponding embedding vector, using the same embedding model that was used for the stored data. This vector is then compared against the indexed embeddings to identify the most similar, and therefore most likely relevant, content. The retrieved content is then passed to the LLM as additional context for generating the response; the model itself is not modified.
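The query step can be sketched in Python as follows. `toy_embed` is a hypothetical word-hashing stand-in for a real embedding model (included so the example runs on its own), and the brute-force loop replaces the approximate nearest-neighbour search a vector database would typically perform.

```python
import math
import re
import zlib

DIM = 256

def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model (word hashing, not semantics)."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents whose vectors are closest to the query vector."""
    q = toy_embed(query)  # the query must go through the same model as the documents
    scored = []
    for doc_id, text in docs.items():
        d = toy_embed(text)
        # Both vectors are unit length, so the dot product is the cosine similarity.
        scored.append((sum(a * b for a, b in zip(q, d)), doc_id))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

docs = {
    "install": "Install the CLI on Linux with the package manager.",
    "billing": "Update your billing address and download invoices.",
    "limits": "API rate limits and access tokens.",
}
print(retrieve("How do I install the CLI?", docs, k=1))
```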

Augment LLM with Retrieved Content

The retrieved content is inserted into the prompt together with the user's query, along with an instruction to base the answer on that information. By incorporating contextual knowledge from external sources, the LLM can produce more accurate and informed answers that align with the user's query.
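A minimal sketch of the augmentation step in Python is shown below. The prompt wording is an assumption rather than a required format, and the call that sends the finished prompt to an LLM is left out because it depends on the provider's API.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into a single prompt string."""
    # Number each chunk so the model (and the user) can refer back to its source.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_prompt(
    "How do I install the CLI?",
    ["Install the CLI on Linux with the package manager."],
)
```

Telling the model to admit when the context is insufficient is one common way to discourage it from falling back on guesses from its training data.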

Challenges in Implementing RAG

While RAG offers significant potential for enhancing the capabilities of LLMs, its implementation poses several challenges that must be addressed:

Difference in Formats

External data sources often come in various formats, including plain text, documents (e.g., .doc, .pdf), and structured data. Handling these diverse formats requires robust preprocessing techniques to ensure compatibility with the retrieval and augmentation process.
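One common way to handle mixed formats is to convert everything to plain text up front, using a loader chosen by file extension. The sketch below covers only formats the Python standard library can parse; the loader functions are hypothetical, and binary formats such as .pdf or .doc would need a dedicated parser library, which is deliberately not shown.

```python
import csv
import io
import json
from pathlib import Path

def load_txt(raw: str) -> str:
    return raw

def load_json(raw: str) -> str:
    # Flatten a top-level JSON object into "key: value" lines so it reads as text.
    data = json.loads(raw)
    if isinstance(data, dict):
        return "\n".join(f"{k}: {v}" for k, v in data.items())
    return json.dumps(data)

def load_csv(raw: str) -> str:
    # Make each row self-describing by pairing values with their header names.
    rows = csv.DictReader(io.StringIO(raw))
    return "\n".join(", ".join(f"{k}={v}" for k, v in row.items()) for row in rows)

LOADERS = {".txt": load_txt, ".md": load_txt, ".json": load_json, ".csv": load_csv}

def to_text(filename: str, raw: str) -> str:
    """Normalise a file's contents to plain text based on its extension."""
    suffix = Path(filename).suffix.lower()
    loader = LOADERS.get(suffix)
    if loader is None:
        # e.g. .pdf or .doc: requires a parser library not included in this sketch
        raise ValueError(f"no loader registered for {suffix!r}")
    return loader(raw)
```

Turning a CSV row into `name=Ada, role=engineer` rather than `Ada,engineer` keeps the column meaning attached to each value, which matters once the row is embedded on its own.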

Document Splitting

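Documents may contain complex structures, such as headings, paragraphs, and embedded content like code snippets or images. Before embedding, they must be split into smaller chunks: chunks that are too large mix several topics into one vector, while chunks that are too small lose their surrounding context. Splitting documents into meaningful chunks while preserving the relationships among them is therefore a key challenge in RAG implementation.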
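A simple heading-aware splitter illustrates one way to keep chunks meaningful: it never lets a chunk cross a Markdown heading, cuts long sections into fixed-size pieces with some overlap, and records each chunk's heading as metadata. The `max_chars` and `overlap` defaults are arbitrary assumptions; real pipelines often measure chunk size in tokens rather than characters.

```python
import re

def split_markdown(text: str, max_chars: int = 500, overlap: int = 50) -> list[dict]:
    """Split Markdown into chunks that never cross a heading boundary."""
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be in [0, max_chars)")
    chunks = []
    # Zero-width split right before every line that starts with 1-6 '#' and a space.
    for section in re.split(r"(?m)^(?=#{1,6} )", text):
        section = section.strip()
        if not section:
            continue
        first_line = section.splitlines()[0]
        heading = first_line.lstrip("#").strip() if first_line.startswith("#") else ""
        start = 0
        while start < len(section):
            # Keep the section heading with every chunk so its context survives splitting.
            chunks.append({"heading": heading, "text": section[start:start + max_chars]})
            if start + max_chars >= len(section):
                break
            start += max_chars - overlap  # step back by `overlap` characters
    return chunks

doc = "# Install\nRun the installer.\n# Usage\nCall the API.\n"
chunks = split_markdown(doc)
```

Note that a `# comment` line inside a fenced code block would be mistaken for a heading here; handling embedded content like that correctly is part of what makes splitting hard.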

Metadata Sensitivity

Metadata associated with the external data, such as tags, categories, or timestamps, can significantly impact the relevance and accuracy of retrieval. Ensuring the effective utilization of metadata without introducing bias or noise is essential for the success of RAG.
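As an illustration, metadata can narrow the candidate set before similarity ranking. The field names `category` and `updated` below are hypothetical; many vector databases offer comparable filtering natively, so in practice this logic usually lives in the query rather than in application code.

```python
from datetime import date

def filter_by_metadata(chunks, category=None, not_before=None):
    """Keep only chunks whose metadata matches the requested category and freshness."""
    kept = []
    for chunk in chunks:
        meta = chunk.get("metadata", {})
        if category is not None and meta.get("category") != category:
            continue
        # Chunks without an "updated" date are treated as arbitrarily old.
        if not_before is not None and meta.get("updated", date.min) < not_before:
            continue
        kept.append(chunk)
    return kept

chunks = [
    {"id": "a", "metadata": {"category": "api", "updated": date(2021, 3, 1)}},
    {"id": "b", "metadata": {"category": "api", "updated": date(2024, 6, 1)}},
    {"id": "c", "metadata": {"category": "billing", "updated": date(2024, 6, 1)}},
]
recent_api = filter_by_metadata(chunks, category="api", not_before=date(2023, 1, 1))
```

A filter that is too strict can remove the only chunk that answers the question, which is one way metadata can introduce bias rather than reduce noise.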

Advanced Techniques to Increase Accuracy

To overcome the challenges associated with RAG implementation and maximize its effectiveness, several advanced techniques can be employed:

- Dense & Sparse Embeddings: Combining dense and sparse embeddings (often called hybrid search) can improve how data is represented and matched. Dense embeddings capture semantic meaning, so they can match paraphrases and synonyms, while sparse embeddings, such as keyword-based representations scored with BM25, capture exact terms like product names, error codes, or acronyms that dense embeddings may miss.

- RAG Fusion: Use the LLM to generate several variations of the user query, retrieve results for each variation, and merge the result lists into a single ranking, typically with Reciprocal Rank Fusion. This approach helps account for synonymous or related terms that may affect retrieval, ensuring that the system considers a broader range of potential answers.

- Use HyDE (Hypothetical Document Embeddings): HyDE is a technique where the LLM first generates a hypothetical answer to the user query, and the embedding of this generated answer, rather than of the original query, is used to retrieve similar content. Because the hypothetical answer is phrased more like the stored documents than a short question is, this can capture aspects of the query that are not readily apparent in its initial formulation.

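- Rerank Retrieved Results: Reranking retrieved results with a Cross-Encoder can improve the accuracy and relevance of the data returned by the system. Unlike the embedding model used for the initial search, which encodes the query and each document separately, a Cross-Encoder reads the query and a candidate document together and outputs a relevance score for that pair. Because this is slower, it is typically applied only to the top results of the initial retrieval, which are then re-sorted by the new score.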

- Fine-Tune Your Model: Training your model with tailored QA (Question-Answer) pairs can further enhance its performance by providing it with specific domain knowledge and contextual understanding. This fine-tuning process enables the LLM to generate more accurate, detailed, and contextually relevant answers to queries that are specific to a particular domain or subject matter.
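RAG Fusion is commonly implemented with Reciprocal Rank Fusion (RRF): each generated query variant produces its own ranked list, and the lists are merged by summing 1 / (k + rank) for every document. The same function can also merge a dense and a sparse ranking for hybrid retrieval. The sketch below shows only the merge step; generating the query variants with an LLM and running the retrievers are assumed to happen elsewhere, and the document IDs are made up.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one fused ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k = 60 is the constant used in the original RRF paper.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for a deterministic order.
    return sorted(scores, key=lambda d: (-scores[d], d))

# Ranked results for three hypothetical variants of the same user query
fused = reciprocal_rank_fusion([
    ["d1", "d2", "d3"],
    ["d2", "d1", "d4"],
    ["d2", "d3", "d1"],
])
print(fused)  # ['d2', 'd1', 'd3', 'd4']
```

Here `d2` comes first because it ranks near the top of all three lists. RRF only uses ranks, not raw scores, so it can combine retrievers whose scores are on different scales.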

Conclusion

Retrieval Augmented Generation represents a powerful paradigm for enhancing the capabilities of large language models by incorporating contextual knowledge from external data sources. By ingesting data, querying it, and augmenting the prompt with the retrieved content, RAG enables LLMs to generate more accurate and informed responses to user queries. Despite the challenges involved, advanced techniques such as hybrid dense and sparse embeddings, RAG Fusion, HyDE, reranking, and fine-tuning offer promising ways to maximize the effectiveness of RAG in real-world applications. As research in this area continues to evolve, RAG has the potential to significantly change the way we search for information and interact with natural language processing technologies.

Related articles

Pedro Reis, Simon Truckenmüller

Accessibility Isn’t a Barrier. It’s a Bridge.

At Mercedes-Benz.io, we often talk about building meaningful experiences. We pride ourselves on designing user journeys that feel intuitive, purposeful, and, most of all, human. But for those experiences to truly matter, they need to be\naccessible. To everyone.

Apr 22, 2025

Guilherme Santos

Unlocking the Power of Bidirectional Playwright for Test Automation

In the fast-paced world of test automation, staying ahead means leveraging the best tools and technologies. One such advancement is Bidirectional Communication in Playwright, a game-changer that enhances real-time browser interaction and opens new possibilities for automated testing.

Apr 11, 2025

Cláudia Turpin

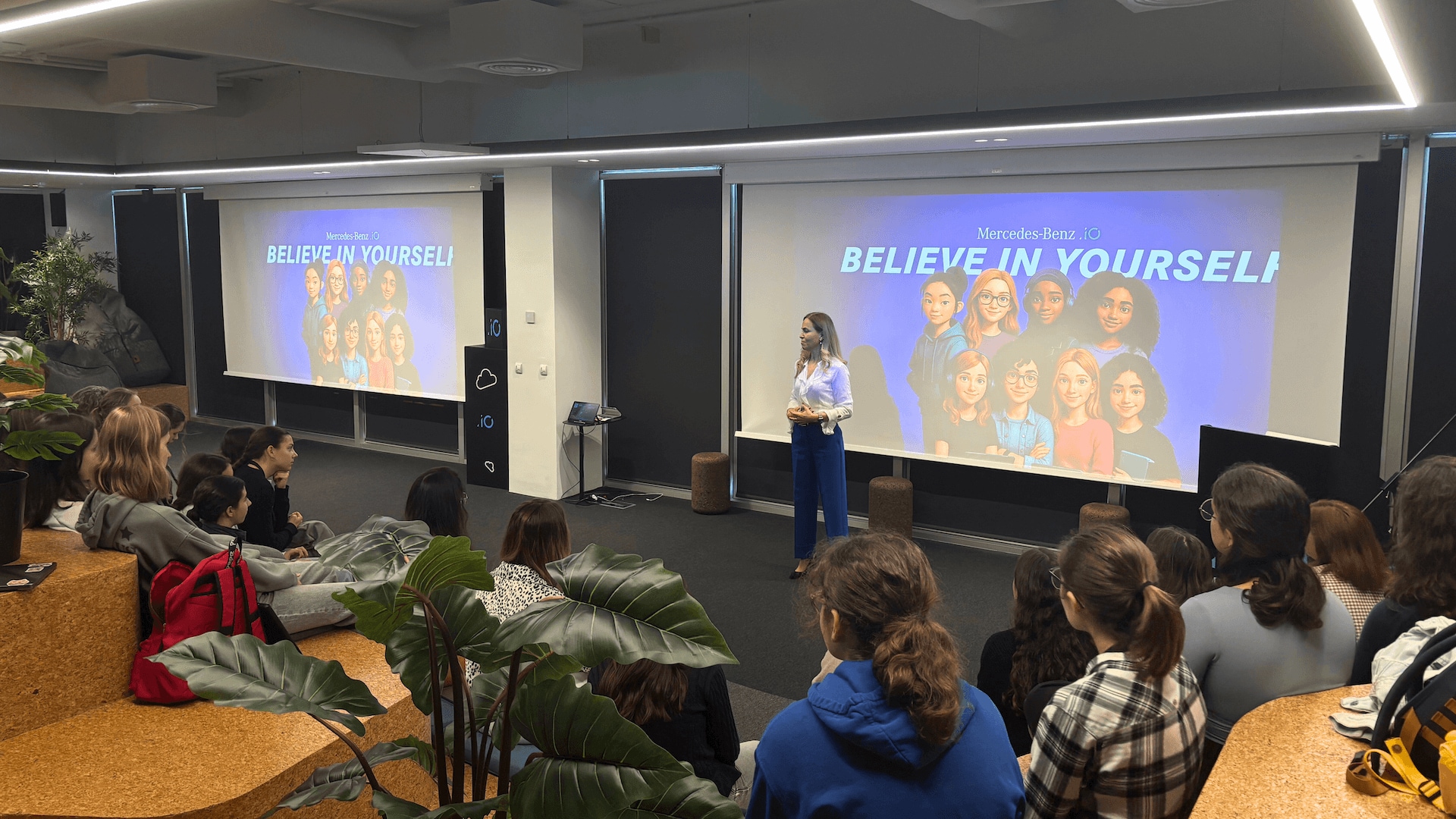

Girls' Day: Inspiring the Next Generation of Women in STEM

Every year, Girls' Day serves as a powerful reminder of the importance of encouraging young women to pursue careers in science, technology, engineering, and mathematics (STEM). Originating in Germany over two decades ago, this initiative\nhas grown into an international movement, empowering girls to explore opportunities in traditionally male-dominated\nfields.

Apr 9, 2025