GitHub Copilot, will it blend?

I was recently given the chance to assess how Gen AI tools could improve our developer experience at Mercedes-Benz.io, and as if that was not enough, I was later challenged to share my thoughts and experience with you, dear reader.

To keep with the times, rather than the obligatory “Webster’s dictionary defines … ” spiel, I will paste ChatGPT’s definition instead.

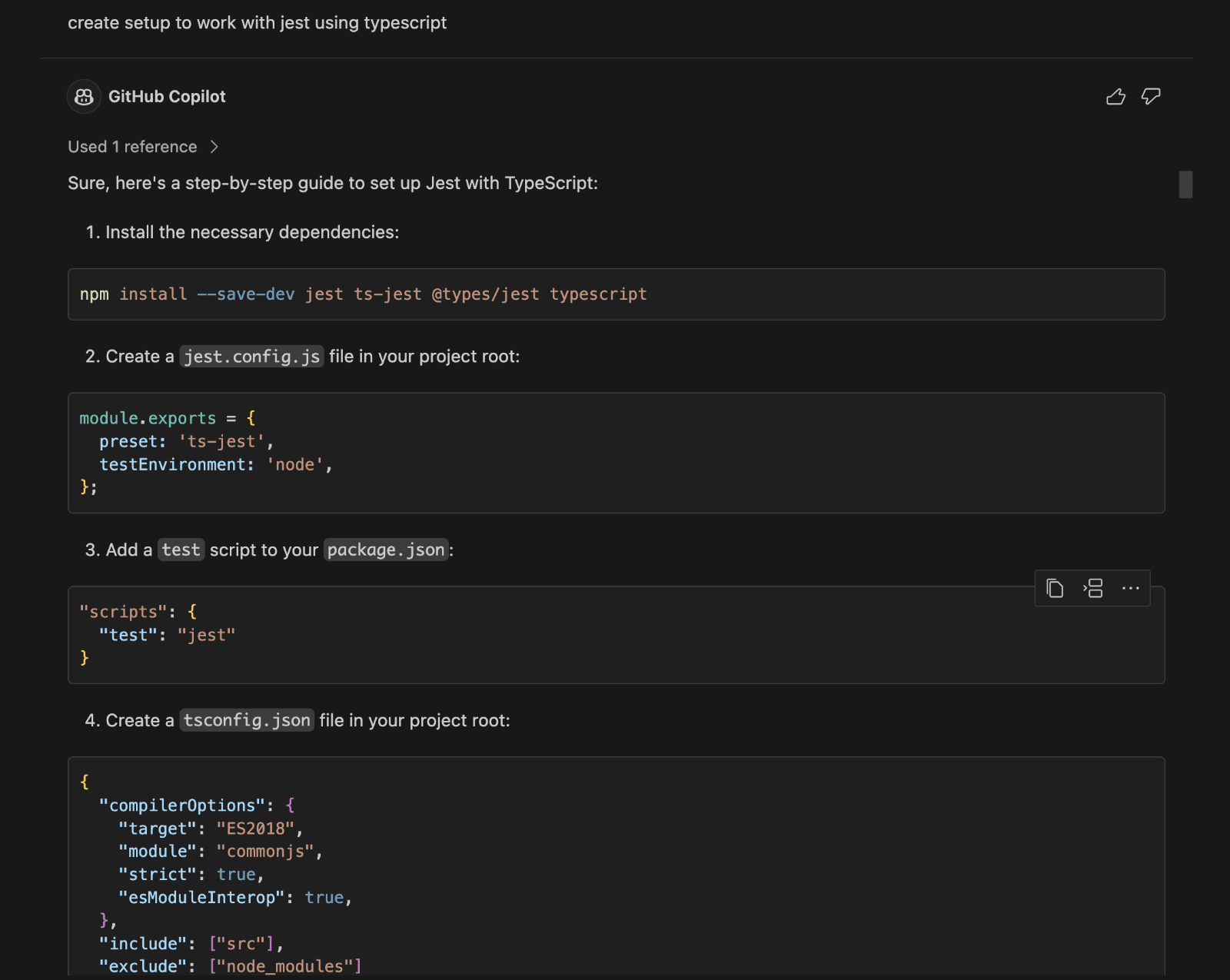

ChatGPT defines GitHub Copilot as an AI-powered code completion tool developed by GitHub in collaboration with OpenAI. It assists developers by suggesting entire lines or blocks of code as they write, using machine learning models trained on a diverse range of publicly available code.

First contact

So, there’s this interesting new tool for code completion. How can we put it to the test? As luck would have it, I was also assessing how to completely refactor one of our codebases, and a very large one at that. A tool that could help deliver this migration quickly was too interesting to dismiss. My first attempts to leverage Copilot for automation came before we even had access to Copilot Chat. The results were already promising, since the auto-completion feature could retrieve context from the file and provide helpful suggestions.

The ability of Copilot to understand what I mean over what I say was nothing short of astounding. It would correctly interpret my instructions to “generate random list of words” and generate the list of objects containing these words that would work in that context.
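To make the idea of a comment hint concrete, here is a hypothetical Python sketch. The comment plays the role of the prompt, and the function below it is the sort of completion one might accept. It was written by hand for illustration and is not actual Copilot output; the names `WORDS` and `random_word_items` and the `id`/`label` fields are assumptions, not taken from our codebase.

```python
import random

# Hypothetical word pool; any list of strings would do.
WORDS = ["apple", "bridge", "cloud", "delta", "ember", "forest"]


# generate random list of words
def random_word_items(count, seed=None):
    """Return `count` dicts, each wrapping a randomly chosen word."""
    rng = random.Random(seed)  # a seeded generator keeps results reproducible
    return [{"id": i, "label": rng.choice(WORDS)} for i in range(count)]


items = random_word_items(3, seed=42)
print(items)
```

Note how the hint asks for words, while the result is a list of objects shaped for the surrounding code. That gap between what is said and what is meant is the behavior described above.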

Chat is a game-changer

The introduction of chat, however, brought a whole new arsenal of approaches. It was time to revisit the topic of code migration. Another welcome surprise was the ease of integrating it into VS Code: as soon as we got the license, the helpful “chat” icon became available in the sidebar.

Keep in mind that, due to my current role, I haven’t had the chance to stay as involved in coding as I used to be. What was once a codebase I could navigate with my eyes closed was now akin to returning to Doctor Who after missing a couple of seasons: the main character is the same, but the leading actor is suddenly someone else. I had high expectations as to what the “explain this” feature would reveal!

In truth, I would say this is not a substitute for well-written, semantic code or well-written documentation. Nonetheless, I believe it could provide very good input for writing said documentation, or assist someone who is not proficient with the programming language in question or familiar with the purpose of the codebase. I will say this, though: it is super handy not to have to open a tool’s documentation website and search it when you can simply ask for the purpose of a specific configuration property.

Next, it was time to dive a bit deeper and work on refactoring some code. The first attempts were promising, but not quite there yet. Thankfully, with chat, I was no longer limited to auto-completion or comment hints, and it was now possible to iterate on the responses the tool was providing.

Prompt Engineering for best results

Enter “prompt engineering”! Turns out, like with people, when you speak clearly, the LLM understands you better and does not have to infer as much. Unlike with people, though, saying “please” or “thank you” is not really a thing, or so they say. Use this bit of information at your own risk.

In the end, the term means that you can, and should, give the LLM clear instructions about what you need, including hints such as context, requirements, and steps. The more you do this, the more proficient you become at using LLMs as a tool. It also pays to experiment a bit and check which prompts produce results in the direction you are looking for.
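One way to picture the “context, requirements and steps” structure is as a small template. The sketch below is only an illustration under my own assumptions: `build_prompt` is a hypothetical helper, not part of Copilot or any library, and the example context and requirements are made up. It simply assembles the three kinds of hints into one message you could paste into the chat.

```python
def build_prompt(context, requirements, steps):
    """Assemble a structured prompt from context, requirements, and ordered steps."""
    lines = ["Context:", context.strip(), "", "Requirements:"]
    lines += [f"- {req}" for req in requirements]
    lines += ["", "Steps:"]
    lines += [f"{n}. {step}" for n, step in enumerate(steps, start=1)]
    return "\n".join(lines)


# Hypothetical example values, for illustration only.
prompt = build_prompt(
    context="This file contains a UI component written against a legacy API.",
    requirements=[
        "Keep the public interface unchanged",
        "Do not add new dependencies",
    ],
    steps=[
        "Explain what the component does",
        "Refactor it to the new API",
        "List any behavior changes",
    ],
)
print(prompt)
```

Keeping the pieces separate makes it easy to change one hint at a time and compare how the responses shift, which is most of what experimenting with prompts amounts to.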

After tweaking prompts and carefully planning my moves, I succeeded in quickly and completely refactoring a couple of components, with the bonus of generating a non-regression test suite (lightweight, for demo purposes) while writing close to no code myself.
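For readers unfamiliar with the idea, a non-regression test checks that refactored code still behaves like the code it replaces. The sketch below shows the general shape in Python, assuming a simple setup where both versions can be called side by side. The two `format_price` functions are hypothetical stand-ins, not the components from our codebase, and this is not the suite Copilot generated.

```python
import unittest


def legacy_format_price(amount, currency):
    # Stand-in for the original implementation (hypothetical).
    return currency + " " + str(round(amount, 2))


def refactored_format_price(amount, currency):
    # Stand-in for the refactored implementation (hypothetical).
    return f"{currency} {round(amount, 2)}"


class NonRegressionTest(unittest.TestCase):
    # Representative inputs; a real suite would cover known edge cases.
    CASES = [(0, "EUR"), (19.999, "EUR"), (1234.5, "USD")]

    def test_refactor_matches_legacy(self):
        for amount, currency in self.CASES:
            with self.subTest(amount=amount, currency=currency):
                self.assertEqual(
                    refactored_format_price(amount, currency),
                    legacy_format_price(amount, currency),
                )
```

Saved to a file, this can be run with `python -m unittest`. The test does not assert what the right output is, only that the refactor did not change it, which is why such a suite is cheap to generate and still useful as a safety net.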

Final Considerations

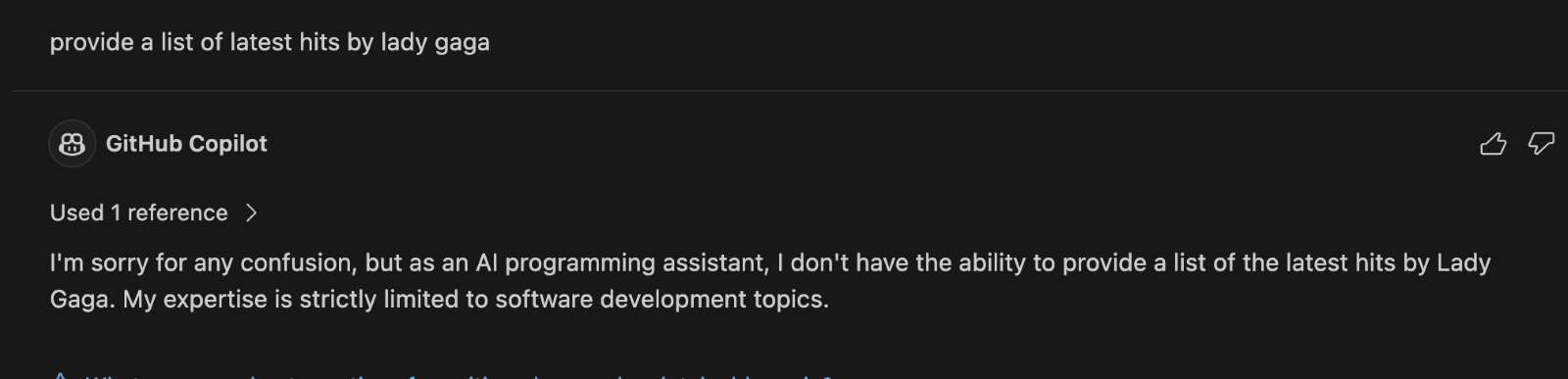

Some words of caution, though. In my personal experience, and even if it’s advertised differently, I never got Copilot to retrieve context from files other than the one currently being viewed. This would sometimes leave Copilot to do some guesswork, and its guesses were often wrong. When it did guess right, I’d say the deciding factor was coding conventions and the LLM’s ability to infer them.

So, what I initially described as Copilot correctly interpreting what I meant rather than what I said was now becoming a double-edged sword: that same interpretation would cause it to produce unnecessary code.

While I do not claim to be anywhere close to an expert on the subject, in my experience Copilot can act as a great accelerator in development, assisting in generating documentation, refactoring code, explaining bugs, and so on. It can also be extremely helpful in enabling less proficient coders to understand code better and, in the end, become better coders.

As with any other tool, though, the outcome will always vary with how it’s used, and it should never be used blindly. Exercising oversight and applying critical thinking to the generated code is a must, and it is the only way this tool can become an asset in our toolkit.

10/10, would prompt again!
